Getting Started with AWS Security

TLDR

You have probably read many times that at AWS, security is the top priority, and there are many resources about it on the internet. In this article I want to share the security basics for improving your AWS solutions, focusing on two resources you should know:

- Recommendations and best practices: the Security Pillar of the AWS Well-Architected Framework

- AWS Security checklist

If you’re looking to dive deeper into the broader range of security learning materials, including digital courses, blogs, whitepapers, and more, AWS recommends the Ramp-Up Guide.

Security Pillar in AWS Well-Architected Framework

You should start here: the Security Pillar is part of the official AWS Well-Architected documentation. I am sure you are familiar with the Well-Architected Framework and the Security Pillar… but have you read the whole thing? Below I compile the main points for you.

The Security Pillar provides guidance to help you apply best practices and current recommendations in the design, delivery, and maintenance of secure AWS workloads. By adopting these practices, you can build architectures that protect your data and systems, control access, and respond automatically to security events.

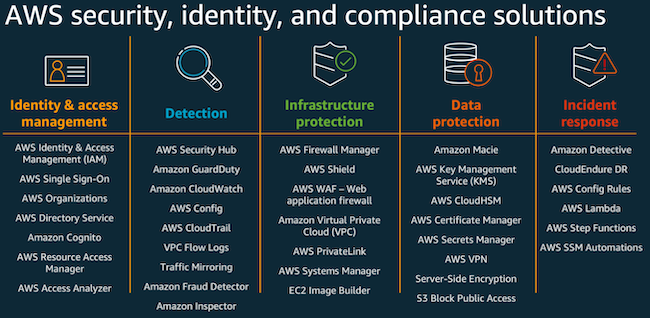

Security in the cloud is composed of six areas:

- Foundations

- Identity and access management

- Detection (logging and monitoring)

- Infrastructure protection

- Data protection

- Incident response

1. Security Foundations

1.1. Design Principles

The security pillar of the Well-Architected Framework captures a set of design principles that turn the security areas into practical guidance that can help you strengthen your workload security.

Where the security epics frame the overall security strategy, these Well-Architected principles describe what you should start doing:

- Implement a strong identity foundation

- Implement the principle of least privilege

- Enforce separation of duties (with appropriate authorization)

- Centralize identity management

- Aim to eliminate reliance on long-term static credentials

- Enable traceability

- Monitor, alert, and audit actions and changes to your environment in real time

- Integrate log and metric collection with systems to automatically investigate and take action

- Apply security at all layers

- Apply a defense in depth approach with multiple security controls

- Apply to all layers (for example, edge of network, VPC, load balancing, every instance and compute service, operating system, application, and code)

- Automate security best practices

- Use automated, software-based security mechanisms to improve your ability to scale securely, more rapidly, and more cost-effectively

- Create secure architectures, including the implementation of controls that are defined and managed as code in version-controlled templates

- Protect data in transit and at rest

- Classify your data into sensitivity levels and use mechanisms, such as encryption, tokenization, and access control where appropriate

- Keep people away from data

- Use mechanisms and tools to reduce or eliminate the need for direct access or manual processing of data

- Prepare for security events

- Prepare for an incident by having incident management and investigation policy and processes that align to your organizational requirements

- Run incident response simulations and use tools with automation to increase your speed for detection, investigation, and recovery

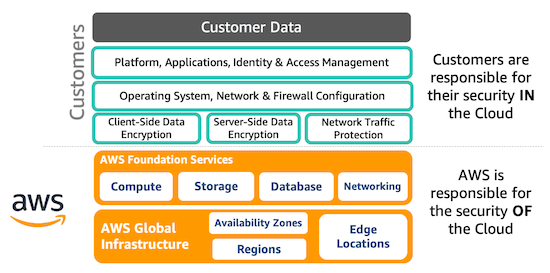

1.2. Shared Responsibility

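Security and compliance are a shared responsibility between AWS and the customer. AWS is responsible for security of the cloud (the infrastructure that runs AWS services), while the customer is responsible for security in the cloud (for example, their data, identity and access configuration, and the operating systems on instances they manage).

More detailed information is available in the AWS Shared Responsibility Model documentation.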

1.3. AWS Account Management and Separation

Best practices for account management and separation:

- Separate workloads using accounts

- Secure your AWS account:

- Do not use the root user for everyday tasks

- Keep the contact information up to date

- Use AWS Organizations to:

- Manage accounts centrally: automate AWS account creation and management, and control those accounts after they are created

- Set controls centrally: use service control policies (SCPs) to apply permission guardrails at the organization, organizational unit, or account level; these guardrails apply to all AWS Identity and Access Management (IAM) users and roles in the affected accounts

- Configure services and resources centrally: configure AWS services that apply to all of your accounts (for example, AWS CloudTrail and AWS Config)

2. Identity and Access Management

Identity and Access Management (IAM) helps customers integrate AWS into their identity management lifecycle and with their sources of authentication and authorization.

The best practices for these capabilities fall into two main areas.

- Identity Management

- Permissions Management

2.1. Identity Management

There are two types of identities you will need to manage:

- Human identities: administrators, developers, operators, and consumers of your applications, …

- Machine identities: workload applications, operational tools, components, …

The following are the best practices related to the identities:

- Rely on a centralized identity provider: This makes it easier to manage access across multiple applications and services, because you are creating, managing, and revoking access from a single location

- Federation with an individual AWS account: you can federate centralized identities to AWS through a SAML 2.0-based identity provider and AWS IAM

- For federation to multiple accounts in your AWS Organization, you can configure your identity source in AWS Single Sign-On (AWS SSO), now called AWS IAM Identity Center

- For managing end-users or consumers of your workloads, such as a mobile app, you can use Amazon Cognito

- Leverage user groups and attributes: Place users with common security requirements in groups defined by your identity provider, and put mechanisms in place to ensure that user attributes are correct and updated

- Use strong sign-in mechanisms

- Use temporary credentials

- Audit and rotate credentials periodically

- Store and use secrets securely: For credentials that are not IAM-related and cannot take advantage of temporary credentials, such as database logins, use a service that is designed to handle the management of secrets, such as AWS Secrets Manager
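Here is a sketch of the "audit and rotate credentials periodically" practice. This Python function scans an IAM credential report for active access keys that have not been rotated recently. You can generate and download the report with the IAM `GenerateCredentialReport` and `GetCredentialReport` APIs.

The sketch makes these assumptions:

- The report is already available as CSV text with ISO 8601 timestamps that include a UTC offset.
- Only the `user`, `access_key_N_active`, and `access_key_N_last_rotated` columns are used.
- The 90-day threshold is an example policy, not an AWS requirement.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

# Assumed rotation policy: flag active access keys older than 90 days.
MAX_KEY_AGE = timedelta(days=90)

def stale_access_keys(report_csv: str, now: datetime) -> list[tuple[str, int]]:
    """Return (user, key_slot) pairs for active access keys older than MAX_KEY_AGE."""
    findings = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        for slot in (1, 2):  # each IAM user can have up to two access keys
            if row.get(f"access_key_{slot}_active") != "true":
                continue
            rotated = row.get(f"access_key_{slot}_last_rotated", "N/A")
            if rotated in ("N/A", ""):
                continue
            if now - datetime.fromisoformat(rotated) > MAX_KEY_AGE:
                findings.append((row["user"], slot))
    return findings

# Hypothetical, trimmed credential report with only the columns used above.
sample = """user,access_key_1_active,access_key_1_last_rotated,access_key_2_active,access_key_2_last_rotated
alice,true,2024-01-01T00:00:00+00:00,false,N/A
bob,true,2024-05-20T00:00:00+00:00,false,N/A
"""
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(stale_access_keys(sample, now))  # [('alice', 1)]
```

A finding here is a prompt to rotate the key, or better, to replace it with temporary credentials.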

2.2. Permission management

Manage permissions to control access to human and machine identities that require access to AWS and your workloads. Permissions control who can access what, and under what conditions.

There are several ways to grant, or limit, access to different types of resources:

- Identity-based policies in IAM (managed or inline): These policies let you specify what that identity can do (its permissions)

- In most cases, you should create your own customer-managed policies following the principle of least privilege

- Resource-based policies are attached to a resource. These policies grant permission to a principal that can be in the same account as the resource or in another account

- Permissions boundaries: use a managed policy to set the maximum permissions that an administrator can set

- This enables you to delegate the ability to create and manage permissions to developers, such as the creation of an IAM role, but limit the permissions they can grant so that they cannot escalate their permission using what they have created

- Attribute-based access control (ABAC): enables you to grant permissions based on tags (attributes)

- Tags can be attached to IAM principals (users or roles) and AWS resources

- Using IAM policies, administrators can create a reusable policy that applies permissions based on the attributes of the IAM principal

- AWS Organizations service control policies (SCPs): define the maximum permissions for member accounts of an organization or organizational unit (OU). SCPs limit permissions but do not grant them

- Session policies: advanced policies that you pass as a parameter when you programmatically create a temporary session for a role or federated user. These policies limit permissions but do not grant permissions
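The policy types above interact in a specific way:

- Identity-based policies grant permissions.
- Permissions boundaries, SCPs, and session policies only narrow what is granted.
- An explicit deny always wins.

The Python sketch below models that interaction for a single account, using action-level matching only. It is a deliberately simplified illustration: it ignores resource-based policies, conditions, resource ARNs, and cross-account rules. The function and parameter names are hypothetical, not part of any AWS SDK.

```python
from fnmatch import fnmatchcase
from typing import Iterable, Optional

def matches(patterns: Iterable[str], action: str) -> bool:
    """True if any pattern (with * and ? wildcards) matches the action, case-insensitively."""
    return any(fnmatchcase(action.lower(), p.lower()) for p in patterns)

def is_allowed(
    action: str,
    identity_allow: Iterable[str],
    identity_deny: Iterable[str] = (),
    scp_allow: Iterable[str] = ("*",),
    boundary_allow: Optional[Iterable[str]] = None,
    session_allow: Optional[Iterable[str]] = None,
) -> bool:
    # An explicit deny always wins.
    if matches(identity_deny, action):
        return False
    # In this model, only the identity-based policy can grant the action.
    if not matches(identity_allow, action):
        return False
    # Guardrail layers never grant; each one that is present must also allow the action.
    for layer in (scp_allow, boundary_allow, session_allow):
        if layer is not None and not matches(layer, action):
            return False
    return True

identity = ["s3:*", "iam:*"]
boundary = ["s3:*", "iam:Get*", "iam:List*"]

print(is_allowed("s3:GetObject", identity, boundary_allow=boundary))    # True
print(is_allowed("iam:CreateRole", identity, boundary_allow=boundary))  # False: the boundary limits it
print(is_allowed("ec2:RunInstances", identity, boundary_allow=["*"]))   # False: a boundary never grants
print(is_allowed("iam:ListRoles", identity, scp_allow=["s3:*"]))        # False: the SCP limits it
```

The third call shows why a permissions boundary is safe to delegate: even a boundary of `*` adds nothing that the identity-based policy did not already grant.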

The following are the best practices related to permission management:

- Grant least privilege access

- Define permission guardrails for your organization: You should use AWS Organizations to establish common permission guardrails that restrict access to all identities in your organization. AWS publishes example service control policies (SCPs) that you can adapt and apply to your organization.

- Analyze public and cross-account access: In AWS, you can grant access to resources in another account. You grant direct cross-account access using policies attached to resources, or by allowing an identity to assume an IAM role in another account.

- IAM Access Analyzer identifies all access paths to a resource from outside of its account. It reviews resource policies continuously and reports findings of public and cross-account access to make it easy for you to analyze potentially broad access.

- Share resources securely: AWS recommends sharing resources using AWS Resource Access Manager (AWS RAM), because it enables you to easily and securely share AWS resources within your AWS Organization and organizational units

- Reduce permissions continuously: You might grant broad access at the start of a project, but you should then evaluate access continuously and restrict it to only the permissions required, to achieve least privilege

- Establish emergency access process: AWS recommends having a process that allows emergency access to your workload, in particular your AWS accounts, in the unlikely event of an automated process or pipeline issue
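To make the guardrail idea concrete, here is a Python sketch that builds an SCP document with two statements. The first denies requests outside an approved set of Regions. The second prevents member accounts from leaving the organization.

It follows the general shape of the Region-restriction example that AWS publishes: a `Deny` with `NotAction` and the `aws:RequestedRegion` condition key. However, two parts are assumptions:

- The exempted global-service actions are an illustrative, incomplete subset.
- The approved Region list is an example.

Review the AWS-published example before using anything like this.

```python
import json

# Illustrative, incomplete list of global services to exempt: their API requests are
# served from a single Region's endpoint, which might not be in your approved list.
GLOBAL_SERVICE_ACTIONS = ["iam:*", "organizations:*", "sts:*", "support:*", "cloudfront:*", "route53:*"]

def region_guardrail_scp(allowed_regions: list[str]) -> dict:
    """Build an SCP that only restricts: both statements are Deny."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideApprovedRegions",
                "Effect": "Deny",
                "NotAction": GLOBAL_SERVICE_ACTIONS,
                "Resource": "*",
                "Condition": {"StringNotEquals": {"aws:RequestedRegion": allowed_regions}},
            },
            {
                "Sid": "DenyLeavingOrganization",
                "Effect": "Deny",
                "Action": "organizations:LeaveOrganization",
                "Resource": "*",
            },
        ],
    }

scp = region_guardrail_scp(["eu-west-1", "eu-central-1"])  # example Regions
print(json.dumps(scp, indent=2))
```

Both statements use `Deny` because an SCP never grants permissions. Identities in the affected accounts still need identity-based policies that allow the actions they use.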

3. Detection

Detective controls help you identify potential security incidents within the AWS environment. Detection consists of two parts:

- Configure

- Investigate

3.1. Configure

- Configure services and application logging

- A foundational practice is to establish a set of detection mechanisms at the account level. This base set of mechanisms is aimed at recording and detecting a wide range of actions on all resources in your account.

- AWS CloudTrail provides the event history of your AWS account activity

- AWS Config monitors and records your AWS resource configurations and allows you to automate the evaluation and remediation against desired configurations

- Amazon GuardDuty is a threat detection service that continuously monitors for malicious activity and unauthorized behavior to protect your AWS accounts and workloads

- AWS Security Hub provides a single place that aggregates, organizes, and prioritizes your security alerts, or findings, from multiple AWS services and optional third-party products to give you a comprehensive view of security alerts and compliance status.

- Many core AWS services provide service-level logging features. For example, Amazon VPC provides VPC Flow Logs

- Amazon CloudWatch Logs can store and analyze logs from EC2 instances and application logs that don’t originate from AWS services (this requires the CloudWatch agent), and CloudWatch Logs Insights lets you process them in real time or dive into analysis.

- Analyze logs, findings, and metrics centrally: A best practice is to deeply integrate the flow of security events and findings into a notification and workflow system.

- GuardDuty and Security Hub provide aggregation, deduplication, and analysis mechanisms for log records that are also made available to you via other AWS services.
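Once VPC Flow Logs are flowing to a central location, even a simple aggregation can surface suspicious patterns. The Python sketch below parses records in the default (version 2) flow log format and counts rejected connections per source address and destination port.

It assumes space-separated records in the default field order and does not handle custom formats. The sample records are hypothetical and use documentation IP ranges.

```python
from collections import Counter

# Field order of the default (version 2) VPC Flow Logs format.
FIELDS = ("version account_id interface_id srcaddr dstaddr srcport dstport "
          "protocol packets bytes start end action log_status").split()

def rejected_by_source(lines):
    """Count REJECT records per (source address, destination port)."""
    counts = Counter()
    for line in lines:
        values = line.split()
        if len(values) != len(FIELDS):
            continue  # skip malformed lines or records in a custom format
        record = dict(zip(FIELDS, values))
        if record["action"] == "REJECT":
            counts[(record["srcaddr"], record["dstport"])] += 1
    return counts

sample = [
    "2 111122223333 eni-0abc 203.0.113.10 10.0.1.5 50122 22 6 1 40 1700000000 1700000060 REJECT OK",
    "2 111122223333 eni-0abc 203.0.113.10 10.0.1.5 50123 22 6 1 40 1700000000 1700000060 REJECT OK",
    "2 111122223333 eni-0abc 198.51.100.7 10.0.1.5 51000 443 6 10 5200 1700000000 1700000060 ACCEPT OK",
]
print(rejected_by_source(sample).most_common(1))  # [(('203.0.113.10', '22'), 2)]
```

In a real pipeline you would run this kind of logic centrally, for example as a query, and feed the results into the alerting workflow the text describes.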

3.2. Investigate

- Implement actionable security events: For each detective mechanism you have, you should also have a process, in the form of a runbook or playbook, to investigate

- Automate response to events:

- In AWS, investigating events of interest and information on potentially unexpected changes in an automated workflow can be achieved using Amazon EventBridge

- Amazon GuardDuty also allows you to route events to a workflow system for those building incident response systems (Step Functions), to a central Security Account, or to a bucket for further analysis.

- Detecting change and routing this information to the correct workflow can also be accomplished using AWS Config Rules and Conformance Packs.

- Conformance packs are a collection of Config Rules and remediation actions you deploy as a single entity authored as a YAML template. A sample conformance pack template is available for the Well-Architected Security Pillar
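The event pattern below is the kind of rule you might create in Amazon EventBridge to route GuardDuty findings with a severity of 7 or higher to a response workflow. EventBridge evaluates patterns itself.

The `pattern_matches` function is a hypothetical local helper for testing a pattern against sample events. It understands only exact values and `numeric` comparisons, so it is not a full implementation of EventBridge matching. The sample events are trimmed to the fields the pattern uses.

```python
import operator

# Route high-severity GuardDuty findings (severity 7 and above).
PATTERN = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
    "detail": {"severity": [{"numeric": [">=", 7]}]},
}

_OPS = {"=": operator.eq, ">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}

def _value_matches(rules, actual):
    """A field matches if any rule in its list matches (exact value or numeric range)."""
    for rule in rules:
        if isinstance(rule, dict) and "numeric" in rule:
            terms = rule["numeric"]  # e.g. [">=", 7] or [">", 0, "<", 5]
            if isinstance(actual, (int, float)) and all(
                _OPS[terms[i]](actual, terms[i + 1]) for i in range(0, len(terms), 2)
            ):
                return True
        elif rule == actual:
            return True
    return False

def pattern_matches(pattern, event):
    """Simplified local check: every key in the pattern must be present and match."""
    for key, rule in pattern.items():
        if key not in event:
            return False
        if isinstance(rule, dict):
            if not isinstance(event[key], dict) or not pattern_matches(rule, event[key]):
                return False
        elif not _value_matches(rule, event[key]):
            return False
    return True

high = {"source": "aws.guardduty", "detail-type": "GuardDuty Finding", "detail": {"severity": 8.0}}
low = {"source": "aws.guardduty", "detail-type": "GuardDuty Finding", "detail": {"severity": 2.0}}
print(pattern_matches(PATTERN, high), pattern_matches(PATTERN, low))  # True False
```

Keeping the pattern as data makes it easy to review and version it alongside the runbook it triggers.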

4. Infrastructure Protection

Infrastructure protection ensures that systems and resources within your workloads are protected against unintended and unauthorized access, and other potential vulnerabilities.

You need to be familiar with Regions, Availability Zones, AWS Local Zones, and AWS Outposts.

4.1. Protecting Networks

When you follow the principle of applying security at all layers, you employ a Zero Trust approach: application components don’t implicitly trust any other component.

- Create network layers: Components that share reachability requirements can be segmented into layers formed by subnets.

- Control traffic at all layers: You should examine the connectivity requirements of each component. A VPC spans a Region, and each subnet sits in a single Availability Zone with an associated network ACL and route table. Within subnets, you use security groups, which act as stateful firewalls, to control traffic to individual resources.

- Some AWS services require components to access the internet to make API calls, because that is where the public AWS API endpoints are located.

- Other AWS services use VPC endpoints within your Amazon VPCs.

- Many AWS services, including Amazon S3 and Amazon DynamoDB, support VPC endpoints, and this technology has been generalized in AWS PrivateLink. AWS recommends you use this approach to access AWS services, third-party services, and your own services hosted in other VPCs securely because all network traffic on AWS PrivateLink stays on the global AWS backbone and never traverses the internet. Connectivity can only be initiated by the consumer of the service, and not by the provider of the service.

- Implement inspection and protection: Inspect and filter your traffic at each layer.

- You can inspect your VPC configurations for potential unintended access using VPC Network Access Analyzer.

- For components transacting over HTTP-based protocols, a web application firewall, AWS WAF, can help protect from common attacks. AWS WAF lets you monitor and block HTTP(s) requests that match your configurable rules that are forwarded to an Amazon API Gateway API, Amazon CloudFront, or an Application Load Balancer.

- For managing AWS WAF, AWS Shield Advanced protection, and Amazon VPC security groups across AWS Organizations, you can use AWS Firewall Manager.

- It allows you to centrally configure and manage firewall rules across your accounts and applications, making it easier to scale enforcement of common rules.

- It also enables you to rapidly respond to attacks, using AWS Shield Advanced, or solutions that can automatically block unwanted requests to your web applications.

- Firewall Manager also works with AWS Network Firewall, a managed service that uses a rules engine to give you fine-grained control over both stateful and stateless network traffic.

- Automate network protection: Automate protection mechanisms to provide a self-defending network based on threat intelligence and anomaly detection.

- For example, intrusion detection and prevention tools can adapt to current threats and reduce their impact.

- A web application firewall is an example of where you can automate network protection, for example, by using the AWS WAF Security Automations solution (https://github.com/awslabs/aws-waf-security-automations) to automatically block requests originating from IP addresses associated with known threat actors.
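Part of controlling traffic at all layers is spotting security groups that expose too much to the internet. The Python sketch below walks security group descriptions shaped like the `SecurityGroups` entries returned by the EC2 `DescribeSecurityGroups` API (`IpPermissions`, `IpRanges`, `Ipv6Ranges`, `FromPort`, `ToPort`, `IpProtocol`). It flags rules that are open to `0.0.0.0/0` or `::/0`.

Two parts of the sketch are assumptions:

- The allowed public ports (80 and 443) are an example policy.
- The group IDs are hypothetical.

```python
import ipaddress

# Assumption: only single-port HTTP/HTTPS rules may be open to the whole internet.
PUBLIC_PORTS_ALLOWED = {80, 443}

def _is_world(cidr: str) -> bool:
    """True for 0.0.0.0/0 or ::/0 (a prefix length of zero)."""
    return ipaddress.ip_network(cidr).prefixlen == 0

def world_open_findings(security_groups: list[dict]) -> list[tuple[str, str]]:
    findings = []
    for sg in security_groups:
        for perm in sg.get("IpPermissions", []):
            cidrs = [r["CidrIp"] for r in perm.get("IpRanges", [])]
            cidrs += [r["CidrIpv6"] for r in perm.get("Ipv6Ranges", [])]
            if not any(_is_world(c) for c in cidrs):
                continue  # rule is not reachable from the whole internet
            if perm["IpProtocol"] == "-1":  # "-1" means all protocols and ports
                findings.append((sg["GroupId"], "all traffic"))
                continue
            low, high = perm.get("FromPort"), perm.get("ToPort")
            if not (low == high and low in PUBLIC_PORTS_ALLOWED):
                findings.append((sg["GroupId"], f"{perm['IpProtocol']} {low}-{high}"))
    return findings

groups = [
    {"GroupId": "sg-web", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
    {"GroupId": "sg-db", "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432, "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]},
    {"GroupId": "sg-any", "IpPermissions": [
        {"IpProtocol": "-1", "Ipv6Ranges": [{"CidrIpv6": "::/0"}]}]},
]
print(world_open_findings(groups))  # [('sg-db', 'tcp 22-22'), ('sg-any', 'all traffic')]
```

SSH open to the world, as in `sg-db`, is exactly the kind of access that Systems Manager Session Manager or a change management workflow can replace.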

4.2. Protecting Compute

Compute resources include EC2 instances, containers, AWS Lambda functions, database services, IoT devices, and more. Each of these compute resource types requires different approaches to secure them. However, they do share common strategies that you need to consider:

- Perform vulnerability management: Frequently scan and patch for vulnerabilities in your code, dependencies, and in your infrastructure to help protect against new threats.

- Automate the creation of infrastructure with CloudFormation and create secure-by-default infrastructure templates verified with CloudFormation Guard

- For patch management, use AWS Systems Manager Patch Manager

- Reduce attack surface: Reduce your exposure to unintended access by hardening operating systems and minimizing the components, libraries, and externally consumable services in use.

- Reduce unused components

- For EC2, you can build your own hardened AMIs and simplify the process with EC2 Image Builder. When using containers, enable Amazon ECR image scanning

- Using third-party static code analysis tools, you can identify common security issues. You can use Amazon CodeGuru for supported languages. Dependency-checking tools can also be used to determine whether libraries your code links against are the latest versions, are free of CVEs, and have licensing conditions that meet your software policy requirements.

- Using Amazon Inspector, you can perform configuration assessments against your instances for known common vulnerabilities and exposures (CVEs), assess against security benchmarks and automate the notification of defects

- Enable people to perform actions at a distance: Removing the ability for interactive access reduces the risk of human error and the potential for manual configuration or management.

- For example, use a change management workflow to manage EC2 instances with tools such as AWS Systems Manager instead of allowing direct access or access via a bastion host.

- AWS CloudFormation stacks deployed from pipelines can automate your infrastructure deployment and management tasks without using the AWS Management Console or APIs directly.

- Implement managed services: Implement services that manage resources, such as Amazon RDS, AWS Lambda, and Amazon ECS, to reduce your security maintenance tasks as part of the shared responsibility model. This means you have more free time to focus on securing your application

- Validate software integrity: Implement mechanisms (e.g. code signing) to validate that the software, code, and libraries used in the workload are from trusted sources and have not been tampered with. You can use AWS Signer

- Automate compute protection: Automate your protective compute mechanisms including vulnerability management, reduction in attack surface, and management of resources. The automation will help you invest time in securing other aspects of your workload, and reduce the risk of human error.
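The core step of "validate software integrity" is to verify an artifact against a trusted reference before you use it. This Python sketch compares SHA-256 digests against a manifest.

This is a simplified stand-in, not code signing. A digest proves only that the bytes match the manifest, so the manifest itself must come from a trusted, tamper-protected source. Services such as AWS Signer add cryptographic signatures that tie an artifact to a trusted publisher. The manifest and file names are hypothetical.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def verify_artifact(name: str, data: bytes, trusted_manifest: dict[str, str]) -> bool:
    """Accept an artifact only if it is listed and its digest matches the trusted value."""
    expected = trusted_manifest.get(name)
    if expected is None:
        return False  # unknown artifacts are rejected by default
    # Constant-time comparison of the two hex digests.
    return hmac.compare_digest(sha256_hex(data), expected)

original = b"print('hello')\n"
manifest = {"app.py": sha256_hex(original)}  # hypothetical manifest recorded at build time

print(verify_artifact("app.py", original, manifest))             # True
print(verify_artifact("app.py", b"print('pwned')\n", manifest))  # False
print(verify_artifact("other.py", original, manifest))           # False
```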

5. Data protection

Before architecting any workload, foundational practices that influence security should be in place:

- data classification provides a way to categorize organizational data based on criticality and sensitivity in order to help you determine appropriate protection and retention controls

- encryption protects data by rendering it unintelligible to unauthorized parties

These methods are important because they support objectives such as preventing mishandling or complying with regulatory obligations.

5.1. Data Classification

- Identify the data within your workload: You need to understand the type and classification of data your workload is processing, the associated business processes, the data owner, applicable legal and compliance requirements, where it’s stored, and the resulting controls that need to be enforced.

- Define data protection controls: By using resource tags, separate AWS accounts per sensitivity, IAM policies, Organizations SCPs, AWS KMS, and AWS CloudHSM, you can define and implement your policies for data classification and protection with encryption.

- Define data lifecycle management: Your defined lifecycle strategy should be based on sensitivity level as well as legal and organizational requirements. Aspects including the duration for which you retain data, data destruction processes, data access management, data transformation, and data sharing should be considered.

- Automate identification and classification: Automating the identification and classification of data can help you implement the correct controls. Using automation for this instead of direct access from a person reduces the risk of human error and exposure. You should evaluate using a tool, such as Amazon Macie, that uses machine learning to automatically discover, classify, and protect sensitive data in AWS.
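As a toy illustration of automated identification and classification, the Python sketch below assigns a sensitivity label to a piece of text. It uses an email regex and a Luhn check for payment card numbers.

This is not how Amazon Macie works. Macie uses managed and custom data identifiers, including machine learning, and operates on S3 objects. The three labels (`restricted`, `confidential`, `internal`) are a hypothetical classification scheme.

```python
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by spaces or dashes.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

def luhn_valid(number: str) -> bool:
    """Luhn checksum, used to filter out random digit runs that are not card numbers."""
    digits = [int(d) for d in number if d.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def classify(text: str) -> str:
    """Return the most sensitive hypothetical label that applies to the text."""
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE.finditer(text)):
        return "restricted"
    if EMAIL.search(text):
        return "confidential"
    return "internal"

print(classify("card 4111 1111 1111 1111 on file"))  # restricted
print(classify("Contact jane@example.com"))          # confidential
print(classify("order 12345 shipped"))               # internal
```

The resulting label could then drive the controls listed above, for example a resource tag, a dedicated account, or a specific KMS key.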

5.2. Protecting data at rest

Data at rest represents any data that you persist in non-volatile storage for any duration in your workload. This includes block storage, object storage, databases, archives, IoT devices, and any other storage medium on which data is persisted. Protecting your data at rest reduces the risk of unauthorized access when encryption and appropriate access controls are implemented.

Encryption and tokenization are two important but distinct data protection schemes.

- Tokenization is a process that allows you to define a token to represent an otherwise sensitive piece of information.

- Encryption is a way of transforming content in a manner that makes it unreadable without a secret key necessary to decrypt the content back into plaintext.
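The difference between the two schemes is easiest to see in code. A token is a random value with no mathematical relationship to the original, so it can only be reversed by looking it up in the vault. The Python sketch below is a minimal, in-memory token vault for illustration only. A real vault would be a hardened, access-controlled, audited data store, and the class and token prefix here are hypothetical.

```python
import secrets

class TokenVault:
    """In-memory vault mapping random tokens to sensitive values (illustration only)."""

    def __init__(self) -> None:
        self._by_token: dict[str, str] = {}
        self._by_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token.
        if value in self._by_value:
            return self._by_value[value]
        token = "tok_" + secrets.token_hex(16)  # random; reveals nothing about the value
        self._by_token[token] = value
        self._by_value[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back; raises KeyError for unknown tokens.
        return self._by_token[token]

vault = TokenVault()
token = vault.tokenize("123-45-6789")
print(token)                    # e.g. tok_ followed by 32 random hex characters
print(vault.detokenize(token))  # 123-45-6789
```

Returning the same token for the same value is a design choice. It lets downstream systems join or deduplicate on tokens, but it also reveals when two records share a value.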

Best practices:

- Implement secure key management: By defining an encryption approach that includes the storage, rotation, and access control of keys, you can help protect your content against unauthorized users and unnecessary exposure to authorized users.

- AWS KMS helps you manage encryption keys and integrates with many AWS services. This service provides durable, secure, and redundant storage for your AWS KMS keys.

- AWS CloudHSM is a cloud-based hardware security module (HSM) that enables you to easily generate and use your own encryption keys in the AWS Cloud.

- Enforce encryption at rest: You should ensure that the only way to store data is by using encryption. You can use AWS Config managed rules to check automatically that you are using encryption, for example, for EBS volumes, RDS instances, and S3 buckets.

- Enforce access control: Different controls including access (using least privilege), backups (see Reliability whitepaper), isolation, and versioning can all help protect your data at rest.

- Access to your data should be audited using detective mechanisms such as CloudTrail and service-level logs

- You should inventory what data is publicly accessible, and plan how you can reduce the amount of data available over time. Amazon S3 Glacier Vault Lock and S3 Object Lock provide mandatory access control: once a vault policy is locked with the compliance option, not even the root user can change it until the lock expires

- Audit the use of encryption keys: Ensure that you understand and audit the use of encryption keys to validate that the access control mechanisms on the keys are appropriately implemented. For example, any AWS service using an AWS KMS key logs each use in AWS CloudTrail. You can then query the CloudTrail logs, with a tool such as Amazon CloudWatch Logs Insights, to ensure that all uses of your keys are valid.

- Use mechanisms to keep people away from data: Keep all users away from directly accessing sensitive data and systems under normal operational circumstances.

- For example, use a change management workflow to manage EC2 instances using tools instead of allowing direct access or a bastion host. This can be achieved using AWS Systems Manager Automation, which uses automation documents that contain steps you use to perform tasks. These documents can be stored in source control, peer-reviewed before running, and tested thoroughly to minimize risk compared to shell access.

- Business users could have a dashboard instead of direct access to a data store to run queries.

- Where CI/CD pipelines are not used, determine which controls and processes are required to adequately provide a normally disabled break-glass access mechanism.

- Automate data at rest protection: Use automated tools to validate and enforce data at rest controls continuously, for example, verify that there are only encrypted storage resources.

- You can automate validation that all EBS volumes are encrypted using AWS Config Rules.

- AWS Security Hub can also verify several different controls through automated checks against security standards. Additionally, your AWS Config Rules can automatically remediate non-compliant resources.
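The idea of verifying that there are only encrypted storage resources can be sketched as a check over EBS volume descriptions. The input is shaped like the `Volumes` entries returned by the EC2 `DescribeVolumes` API (`VolumeId`, `Encrypted`, `KmsKeyId`). In practice, an AWS Config managed rule such as `encrypted-volumes` performs the first check for you, so this Python sketch only illustrates the logic.

Two parts of the sketch are assumptions:

- The volume IDs and key ARNs are hypothetical.
- The second check, that volumes use an approved KMS key, is an example policy.

```python
def unencrypted_volumes(volumes: list[dict]) -> list[str]:
    """IDs of volumes that are not encrypted at rest."""
    return [v["VolumeId"] for v in volumes if not v.get("Encrypted", False)]

def volumes_with_unapproved_keys(volumes: list[dict], approved_key_arns: set[str]) -> list[str]:
    """IDs of encrypted volumes whose KMS key is not on the approved list."""
    return [
        v["VolumeId"]
        for v in volumes
        if v.get("Encrypted") and v.get("KmsKeyId") not in approved_key_arns
    ]

APPROVED = {"arn:aws:kms:eu-west-1:111122223333:key/example-key-id"}
volumes = [
    {"VolumeId": "vol-aaa", "Encrypted": True, "KmsKeyId": "arn:aws:kms:eu-west-1:111122223333:key/example-key-id"},
    {"VolumeId": "vol-bbb", "Encrypted": False},
    {"VolumeId": "vol-ccc", "Encrypted": True, "KmsKeyId": "arn:aws:kms:eu-west-1:111122223333:key/other-key-id"},
]
print(unencrypted_volumes(volumes))                     # ['vol-bbb']
print(volumes_with_unapproved_keys(volumes, APPROVED))  # ['vol-ccc']
```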

5.3. Protecting data in transit

Data in transit is any data that is sent from one system to another. This includes communication between resources within your workload as well as communication between other services and your end-users. By providing the appropriate level of protection for your data in transit, you protect the confidentiality and integrity of your workload’s data.

Best practices:

- Implement secure key and certificate management: Store encryption keys and certificates securely and rotate them at appropriate time intervals with strict access control. The best way to accomplish this is to use a managed service, such as AWS Certificate Manager (ACM). It lets you easily provision, manage, and deploy public and private Transport Layer Security (TLS) certificates for use with AWS services and your internal connected resources.

- Enforce encryption in transit: AWS services provide HTTPS endpoints using TLS for communication, thus providing encryption in transit when communicating with the AWS APIs.

- Insecure protocols, such as HTTP, can be audited and blocked in a VPC through the use of security groups.

- HTTP requests can also be automatically redirected to HTTPS in Amazon CloudFront or on an Application Load Balancer.

- Additionally, you can use VPN connectivity into your VPC from an external network to facilitate the encryption of traffic. Third-party solutions are available in the AWS Marketplace if you have special requirements.

- Authenticate network communications: Using network protocols that support authentication (such as TLS or IPsec) allows trust to be established between the parties, and adds encryption to reduce the risk of communications being altered or intercepted.

- Automate detection of unintended data access: Use tools such as:

- Amazon GuardDuty automatically detects suspicious activity or attempts to move data outside of defined boundaries.

- Amazon VPC Flow Logs, which capture network traffic information and can be used with Amazon EventBridge to trigger the detection of abnormal connections, both successful and denied.

- Access Analyzer for S3, which can help you assess what data in your S3 buckets is accessible to whom.

- Secure data between VPCs or on-premises locations: You can use AWS PrivateLink to create a secure, private network connection from your Amazon Virtual Private Cloud (Amazon VPC) or on-premises network to services hosted in AWS.

- You can access AWS services, third-party services, and services in other AWS accounts as if they were on your private network.

- With AWS PrivateLink, you can access services across accounts with overlapping IP CIDRs without needing an Internet Gateway or NAT.

- You also do not have to configure firewall rules, path definitions, or route tables.

- Traffic stays on the Amazon backbone and doesn’t traverse the internet, therefore your data is protected.
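For enforcing encryption in transit, a widely used pattern for Amazon S3 is a bucket policy that denies any request not sent over TLS. It relies on the `aws:SecureTransport` condition key. The Python sketch below builds such a policy. The bucket name is a hypothetical placeholder, and you would still attach the policy yourself, for example with the S3 `PutBucketPolicy` API.

```python
import json

def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that rejects every S3 request made without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }

print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```

Because this is an explicit deny, it overrides any allow for plain-HTTP requests, no matter which principal makes them.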

6. Incident response

Incident Response helps customers define and execute a response to security incidents.

6.1. Design Goals of Cloud Response

- Establish response objectives: Some common goals include containing and mitigating the issue, recovering the affected resources, and preserving data for forensics and attribution.

- Document plans: Create plans to help you respond to, communicate during, and recover from an incident.

- Respond using the cloud

- Know what you have and what you need: Preserve logs, snapshots, and other evidence by copying them to a centralized security cloud account.

- Use redeployment mechanisms when possible, and make your response mechanisms safe to execute more than once and in environments in an unknown state.

- Automate where possible: As you see issues or incidents repeat, build mechanisms that programmatically triage and respond to common situations. Use human responses for unique, new, and sensitive incidents.

- Choose scalable solutions: reduce the time between detection and response.

- Learn and improve your process: When you identify gaps in your processes, tools, or people, implement plans to fix them. Simulations are safe methods to find gaps and improve processes.
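"Safe to execute more than once and in environments in an unknown state" means that response actions should be idempotent. The Python sketch below shows the idea with a containment step that moves an instance into a quarantine security group and tags it. It checks the current state before each change, so running it twice, or on a partially isolated instance, has no additional effect.

The `Instance` class is a hypothetical local model, not an EC2 API object. The instance, group, and tag names are assumptions. A real runbook would call the EC2 APIs (for example through Systems Manager Automation) and would also preserve evidence before changing anything.

```python
from dataclasses import dataclass, field

@dataclass
class Instance:
    """Hypothetical local model of the instance state the runbook cares about."""
    instance_id: str
    security_groups: list[str]
    tags: dict[str, str] = field(default_factory=dict)

def isolate(instance: Instance, quarantine_sg: str, actions: list[str]) -> Instance:
    """Idempotent containment: each step only runs if the instance is not already in that state."""
    if instance.security_groups != [quarantine_sg]:
        actions.append(f"{instance.instance_id}: replace {instance.security_groups} with ['{quarantine_sg}']")
        instance.security_groups = [quarantine_sg]
    if instance.tags.get("ir-status") != "isolated":
        actions.append(f"{instance.instance_id}: tag ir-status=isolated")
        instance.tags["ir-status"] = "isolated"
    return instance

actions: list[str] = []
target = Instance("i-example", ["sg-app"])
isolate(target, "sg-quarantine", actions)
isolate(target, "sg-quarantine", actions)  # second run changes nothing
print(len(actions))  # 2
```

Logging each action that was actually taken also gives responders an accurate record of what the automation changed.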

In AWS, there are several different approaches you can use when addressing incident response.

- Educate your security operations and incident response staff about cloud technologies and how your organization intends to use them.

- Development Skills: programming, source control, version control, CI/CD processes

- AWS Services: security services

- Application Awareness

- The best way to learn is hands-on, through running incident response game days

- Prepare your incident response team to detect and respond to incidents in the cloud, enable detective capabilities, and ensure appropriate access to the necessary tools and cloud services.

- Additionally, prepare the necessary runbooks, both manual and automated, to ensure reliable and consistent responses.

- Work with other teams to establish expected baseline operations, and use that knowledge to identify deviations from those normal operations.

- Simulate both expected and unexpected security events within your cloud environment to understand the effectiveness of your preparation.

- Iterate on the outcome of your simulation to improve the scale of your response posture, reduce time to value, and further reduce risk.

AWS Security checklist

This AWS whitepaper provides customer recommendations that align with the Well-Architected Framework Security Pillar. It is available on the AWS website.

1. Identity and Access Management

- Secure your AWS Account

- Use AWS Organizations

- Use the root user with MFA

- Configure account contacts

- Rely on centralized identity provider

- Centralize identities using either AWS Single Sign-On or a third-party provider to avoid routinely creating IAM users or using long-term access keys; this approach makes it easier to manage multiple AWS accounts and federated applications

- Use multiple AWS accounts

- Use Service Control Policies to implement guardrails

- AWS Control Tower can help you easily set up and govern a multi-account AWS environment

- Store and use secrets securely

- Use AWS Secrets Manager if you cannot use temporary credentials

2. Detection

- Enable foundational services for all AWS accounts

- AWS CloudTrail to log API activity

- Amazon GuardDuty for continuous monitoring

- AWS Security Hub for a comprehensive view of your security posture

- Configure service and application-level logging

- In addition to your application logs, enable logging at the service level, such as Amazon VPC Flow Logs and Amazon S3, CloudTrail, and Elastic Load Balancer access logging, to gain visibility into events

- Configure logs to flow to a central account, and protect them from manipulation or deletion

- Configure monitoring and alerts, and investigate events

- Enable AWS Config to track the history of resources

- Enable Config Managed Rules to automatically alert or remediate undesired changes

- Configure alerts for all your sources of logs and events, from AWS CloudTrail to Amazon GuardDuty and your application logs, for high-priority events and investigate

3. Infrastructure Protection

- Patch your operating system, applications, and code

- Use AWS Systems Manager Patch Manager to automate the patching process of all systems and code for which you are responsible, including your OS, applications, and code dependencies

- Implement distributed denial-of-service (DDoS) protection for your internet-facing resources

- Use Amazon CloudFront, AWS WAF, and AWS Shield to provide layer 7 and layer 3/layer 4 DDoS protection

- Control access using VPC Security Groups and subnet layers

- Use security groups for controlling inbound and outbound traffic, and automatically apply rules for both security groups and WAFs using AWS Firewall Manager

- Group different resources into different subnets to create routing layers, for example, database resources do not need a route to the internet

4. Data protection

- Protect data at rest

- Use AWS Key Management Service (KMS) to protect data at rest across a wide range of AWS services and your applications

- Enable default encryption for Amazon EBS volumes and Amazon S3 buckets

- Encrypt data in transit

- Enable encryption for all network traffic, including Transport Layer Security (TLS) for web-based network infrastructure you control using AWS Certificate Manager to manage and provision certificates

- Use mechanisms to keep people away from data

- Keep all users away from directly accessing sensitive data and systems. For example, provide an Amazon QuickSight dashboard to business users instead of direct access to a database, and perform actions at a distance using AWS Systems Manager automation documents and Run Command

5. Incident response

- Ensure you have an incident response (IR) plan

- Begin your IR plan by building runbooks to respond to unexpected events in your workload

- Make sure that someone is notified to take action on critical findings

- Begin with GuardDuty findings. Turn on GuardDuty and ensure that someone with the ability to take action receives the notifications. Automatically creating trouble tickets is the best way to ensure that GuardDuty findings are integrated with your operational processes

- Practice responding to events

- Simulate and practice incident response by running regular game days, incorporating the lessons learned into your incident management plans, and continuously improving them